Earlier this year CML released the EV6550DHAT, a HAT (Hardware Attached on Top) based CODEC and PA solution for the Raspberry Pi 2, 3 or 4 single-board computers. The board was made available with a GUI that runs natively on the Raspberry Pi, enabling quick and easy demonstration of the CMX655D Ultra Low Power Voice codec.

The GUI is functional and comes with a software API (Application Programming Interface) that allows the user to control the EV6550DHAT via the Raspberry Pi GPIO connector. However, the GUI does not explicitly support third-party open-source audio applications, of which there are many available for the Raspberry Pi.

This blog introduces the recently released Advanced Linux Sound Architecture (ALSA) software driver, which enables easy integration of the EV6550DHAT with other compatible applications.

Raspbian, the popular Linux-based Raspberry Pi OS, supports ALSA, a software framework that provides an open API for third-party sound card device drivers. As part of the Raspbian kernel, it is easily adopted and adapted by audio hardware and software developers alike.

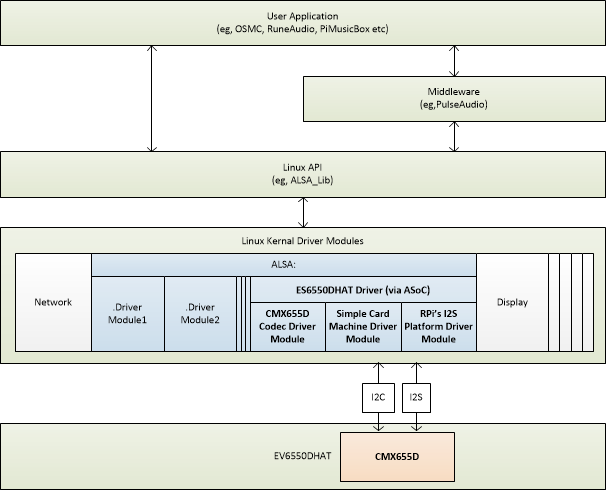

The EV6550DHAT ALSA driver uses the Raspberry Pi I2S interface for 16-bit audio samples and the I2C interface for CMX655D codec control. As the diagram above indicates, three driver modules are included in this release; together they make up the ‘CMX655D sound card’, which resides within the Raspberry Pi ALSA kernel framework.

An installation package is available from CMLmicro.com, which allows for a quick installation. Once it is installed, the EV6550DHAT can be used as a functional sound card.

The journey starts here…

For this exercise I tested a number of audio-specific applications to see how they performed with this new hardware. With the ALSA driver now available, this should not be difficult.

I started with the alsamixer application, which is accessible from the Raspbian command line or terminal by typing ‘alsamixer’. Thankfully, this works straight out of the “box” with no modification. The CMX655D appears as a valid sound card option that can be selected as such.
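If you would rather confirm from a script that the card has registered with ALSA, the kernel's card listing in /proc/asound/cards can be parsed. What follows is only a minimal sketch: the sample listing and the card name it contains are hypothetical, and the name that the EV6550DHAT driver actually registers may differ.

```python
import re
from pathlib import Path

# Matches the first line of each entry in /proc/asound/cards, for example:
#  1 [CMX655D        ]: cmx655d - CMX655D sound card
CARD_LINE = re.compile(r"^\s*(\d+)\s+\[([^\]]*)\]:\s*(.*)$")


def parse_cards(text):
    """Return a list of (index, card_id, description) tuples."""
    cards = []
    for line in text.splitlines():
        match = CARD_LINE.match(line)
        if match:
            index, card_id, description = match.groups()
            cards.append((int(index), card_id.strip(), description.strip()))
    return cards


def find_card(text, keyword):
    """Return the index of the first card mentioning keyword, else None."""
    keyword = keyword.lower()
    for index, card_id, description in parse_cards(text):
        if keyword in card_id.lower() or keyword in description.lower():
            return index
    return None


# Hypothetical listing, for illustration only; real names will differ.
SAMPLE = """\
 0 [Headphones     ]: bcm2835_headpho - bcm2835 Headphones
                      bcm2835 Headphones
 1 [CMX655D        ]: cmx655d - CMX655D sound card
                      CMX655D sound card
"""

if __name__ == "__main__":
    path = Path("/proc/asound/cards")
    listing = path.read_text() if path.exists() else SAMPLE
    print(find_card(listing, "cmx655"))
```

The index returned is the card number that ALSA tools accept, for example with the `-c` option of alsamixer.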

I should mention at this point that a suitable 4 to 8 ohm speaker must be attached to the EV6550DHAT for it and the ALSA driver to be demonstrated. Purely for convenience’s sake, I used a regular Bluetooth speaker with line input for this test and found that it worked a treat.

As a simple test, I’d suggest that you try playing a .wav audio file using ALSA. From the terminal just type ‘aplay /home/pi/MyTune.wav’, where ‘MyTune.wav’ is a suitable audio file already saved to the home location.
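If you don't have a suitable .wav file to hand, one can be generated with Python's standard library. This is a minimal sketch rather than anything supplied with the driver: the function name is my own, and the 48 kHz mono 16-bit format is an assumption chosen to match the 16-bit samples the driver supports, so adjust it if your setup expects something else.

```python
import math
import struct
import wave


def write_test_tone(path, freq_hz=440.0, seconds=1.0, rate=48000, amplitude=0.3):
    """Write a mono, 16-bit PCM sine tone to a WAV file; return the frame count."""
    n_frames = int(seconds * rate)
    peak = int(amplitude * 32767)  # scale to the signed 16-bit range
    frames = bytearray()
    for n in range(n_frames):
        sample = int(peak * math.sin(2.0 * math.pi * freq_hz * n / rate))
        frames += struct.pack("<h", sample)  # little-endian signed 16-bit
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)      # mono
        wav.setsampwidth(2)      # 2 bytes = 16 bits per sample
        wav.setframerate(rate)
        wav.writeframes(bytes(frames))
    return n_frames
```

Calling `write_test_tone("/home/pi/MyTune.wav")` produces a one-second 440 Hz tone that can then be played with aplay.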

Through alsamixer, you can access the CMX655D API controls and make changes to the CMX655D encode and decode channels. So at this point I’d recommend that you grab a copy of the latest CMX655D datasheet from cmlmicro.com if you need to modify these parameters.

Next up I looked at Audacity. This is an excellent audio editing app that’s been around for years and is respected by amateurs and professionals alike. It’s also easy to install and use. Other than a quick check of the microphone and speaker settings, no setup was needed. Both record and playback worked well, and I could clearly discern the differences in audio quality at different CMX655D sample rates.

I turned to PulseAudio next. This app is a general-purpose sound server that sits between the installed apps and the ALSA kernel. It automatically detects which sound cards are available, mixes together multiple audio streams and routes them to the selected target. This is very useful when you have a number of audio-based apps running, as without it the apps will contend for the sound card.

I found that life was a little easier once I’d installed ‘pavucontrol’. This provides a useful GUI for PulseAudio, making it much easier to adjust audio levels and to see how the audio is being routed.

Now things got fun! I installed FluidSynth, which is a multi-timbral software synthesizer that’s command line driven. I then loaded QSynth – this acts as a GUI for FluidSynth. Both apps are best used with a MIDI controller. For my purposes, I loaded VMPK (Virtual MIDI Piano Keyboard) and got stuck in. Using the selected general MIDI interface I can happily confirm that it performs very well.

During my testing I selected the PulseAudio driver along with a sample rate of 48000 Hz, and a buffer size and count of 512 and 4 respectively. These seemed to offer the best balance of quality vs latency on a Raspberry Pi 3B. Results, as expected, were very good despite my musical skills, but I’m working on that!
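To put rough numbers on that trade-off, the buffering delay can be estimated from the settings. This is a back-of-the-envelope sketch that assumes the buffer size is counted in sample frames and that all buffers may be queued at once. It ignores any extra delay added by PulseAudio or the hardware, so treat the result as a lower bound.

```python
def buffer_latency_ms(sample_rate_hz, buffer_frames, buffer_count):
    """Return (per-buffer, total) buffering delay in milliseconds."""
    per_buffer = 1000.0 * buffer_frames / sample_rate_hz
    return per_buffer, per_buffer * buffer_count


per_buffer, total = buffer_latency_ms(48000, 512, 4)
print(f"{per_buffer:.2f} ms per buffer, {total:.2f} ms total")
# prints: 10.67 ms per buffer, 42.67 ms total
```

Halving the buffer size or count halves this figure, at the cost of a greater risk of audible dropouts on a busy Pi.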

So, I’ll set aside the musical aspirations for now and move on to what is, for me, the most interesting application for the Raspberry Pi and EV6550DHAT, and perhaps its trump card: its potential use as a smart speaker for home use. There are a number of solutions out there from Amazon, Google and lesser-known third-party sources such as Mycroft, so there is a wide choice. For this test, though, I chose Google Assistant as it is relatively lightweight and can be accessed through a Python-based SDK. If you fancy trying this yourself, you’ll need to set up a Google Developer account and Project ID in order to use Google Assistant with the Raspberry Pi. I’ll outline the process below.

I would advise that you follow the instructions directly from the Google Developers site, developers.google.com, or from recent online guides. I found that there is inaccurate and out-of-date information on third-party sites, which made the installation process quite confusing; not what I wanted! The installation is multipart because the Raspberry Pi acts only as a router for the audio and control; the real hard work is performed on Google’s servers, and the two need to talk to each other over a secure channel.

So, in very simplified terms, once registered online on the Google Developers site, you will need to go through the following steps:

- Register and set up a project through the Google Actions Console, console.actions.google.com. From here a ’New Project’ can be added.

- Go to the Google Developers Console and enable the Google Embedded Assistant API.

- Return to the Google Actions Console and register the Raspberry Pi, making sure the device model ID is recorded. You’ll then be able to download a credentials file to allow the Raspberry Pi to communicate with the Google server.

- Configure the OAuth credentials/consent screen and save.

- Finally, set the activity controls (web/app/voice activity, device activity and location).

Return to the Raspberry Pi and:

- Set up a credentials.json containing the contents of a downloaded credentials file in a newly created /googleassistant/ folder.

- Set up and enable the necessary Python 3 virtual environment; a set of software dependencies will likely need to be installed as well.

- Next, load Python pip so that the Google Assistant Library, SDK[samples] and oauthlib[tool] modules can be installed.

- Run the oauthlib tool and complete the subsequent exchange of the authentication code via the URL provided.

- Finally, make sure during the next Google agreement stage that you are using the correct Device and Project ID names.
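The virtual environment and module installation steps above can be sketched with Python's standard venv module. Treat this as an illustration only: the helper names are my own, the `env` directory is an arbitrary choice, and the pip package names are my best reading of the modules listed above, so check them against Google's current instructions before relying on them.

```python
import venv
from pathlib import Path

# Assumed pip package names for the modules listed above; verify them
# against the current Google Assistant SDK instructions.
PACKAGES = [
    "google-assistant-library",
    "google-assistant-sdk[samples]",
    "google-auth-oauthlib[tool]",
]


def create_env(env_dir, with_pip=True):
    """Create a Python 3 virtual environment and return its interpreter path."""
    venv.create(env_dir, with_pip=with_pip)
    return Path(env_dir) / "bin" / "python"


def pip_install_commands(env_dir, packages=PACKAGES):
    """Build one 'pip install --upgrade' command per package (not executed)."""
    python = str(Path(env_dir) / "bin" / "python")
    return [[python, "-m", "pip", "install", "--upgrade", pkg] for pkg in packages]
```

Each command returned by `pip_install_commands("env")` can be passed to `subprocess.run` once `create_env("env")` has completed. Building the commands separately makes it easy to review them before anything is installed.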

And that’s about it. Sounds simple? Well, I make no apologies for the brevity of the above steps, but for the complete process go online, read, type carefully and take your time.

I’ll also add that full-function Google Assistant support is not available as this is a non-commercial project. However, it does allow you to test interaction with Google Assistant and try some simple tests. Note that, when tested, the “wake on a key word” function was not supported but push-to-talk was.

Try:

‘source env/bin/activate’

‘googlesamples-assistant-pushtotalk --project-id <The project ID> --device-model-id <The device ID>’

Press return and test.
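If you want to launch the push-to-talk sample from your own Python script rather than by hand, a minimal sketch might look like this. The helper name and the placeholder IDs are hypothetical; substitute the project and device model IDs you recorded earlier.

```python
import shlex


def pushtotalk_command(project_id, device_model_id):
    """Build the argument list for the push-to-talk sample."""
    return [
        "googlesamples-assistant-pushtotalk",
        "--project-id", project_id,
        "--device-model-id", device_model_id,
    ]


# Placeholder IDs, for illustration only.
cmd = pushtotalk_command("my-project-id", "my-device-model-id")
print(" ".join(shlex.quote(arg) for arg in cmd))
```

Passing the list form to `subprocess.run(cmd)` from inside the activated virtual environment avoids any shell quoting problems if an ID contains unusual characters.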

Other options are available via:

‘googlesamples-assistant-pushtotalk --help’

And tools via:

‘googlesamples-assistant-devicetool --help’

Run within the Python virtual environment, through PulseAudio and the new EV6550DHAT ALSA driver, Google Assistant worked very well; it is certainly a convenient solution that produces excellent results.

In conclusion, this has been a fascinating exercise and one that I’ve thoroughly enjoyed. The EV6550DHAT and its GUI have been around for six months, and although modification and integration with the supplied software is possible today, it is not as convenient as it could be… this all changes with the introduction of the ALSA driver. It offers the ability to transform the EV6550DHAT into a flexible, fully functional tool in its own right and, in the process, makes it much easier to evaluate the HAT and the CMX655D combination.